Newer

Older

TinyNets is stateless, resilient multipath message passing for networked control systems.

See a longer report on the work (for 6.829) [here](https://gitlab.cba.mit.edu/jakeread/tinynets/raw/master/document/6-829_project-jr_dk_ns_pw_Tiny_Nets_finalReport.pdf).

We developed TinyNet(s) in response to a lack of purpose-built networking solutions for realtime (or 'just in time') embedded systems. I.E. Robotics, Avionics, and Manufacturing devices. NCS are strange (messages are typically very small, determinism is more important than throughput, hardware resources are limited) and the market is small enough that no technology exists for the niche.

Most NCS use Switched Ethernet! Critically, Ethernet on its own does not allow multipath routing due to the Spanning Tree Protocol<sup>1</sup>. However, we see Multipath as a must-have for resilient message passing, and a driving strategy for bringing determinism to a network.

These problems exist in Datacenters. Multipathing in a Datacenter is achieved by adding Network Controllers to the infrastructure that implement some other routing scheme<sup>1</sup> by remotely manipulating routers' port-forwarding tables. However, these Network Controllers must know *the entire state* of the network, and take time - between 200ms and 10s - to re-configure forwarding schemes. A sudden 200ms increase in the RTT of a packet is a no-go for embedded systems, where control loops rely on sensor data, say, once every 200*us* to remain stable.

TinyNet routers maintain a port forwarding table and update one-another on their current queue sizes. With this small amount of data, each router makes a semi-intelligent port-forwarding decision for each flow of data. Essentially, the job of the Network Controller is distributed into each router - and routers use *local* information rather than *global* information to perform a distributed 'greedy' path-planning algorithm. This is a simple Distance-Vector scheme.

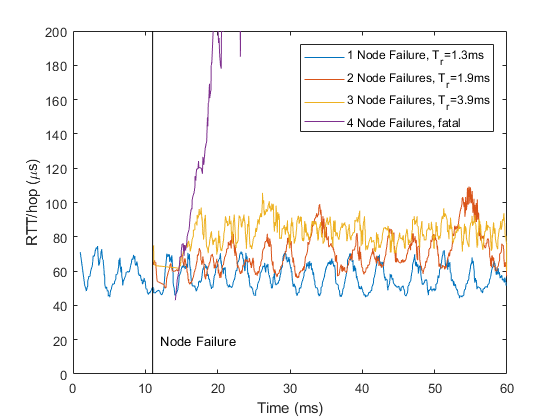

We show that TinyNets recovers fairly well from the loss of nodes:

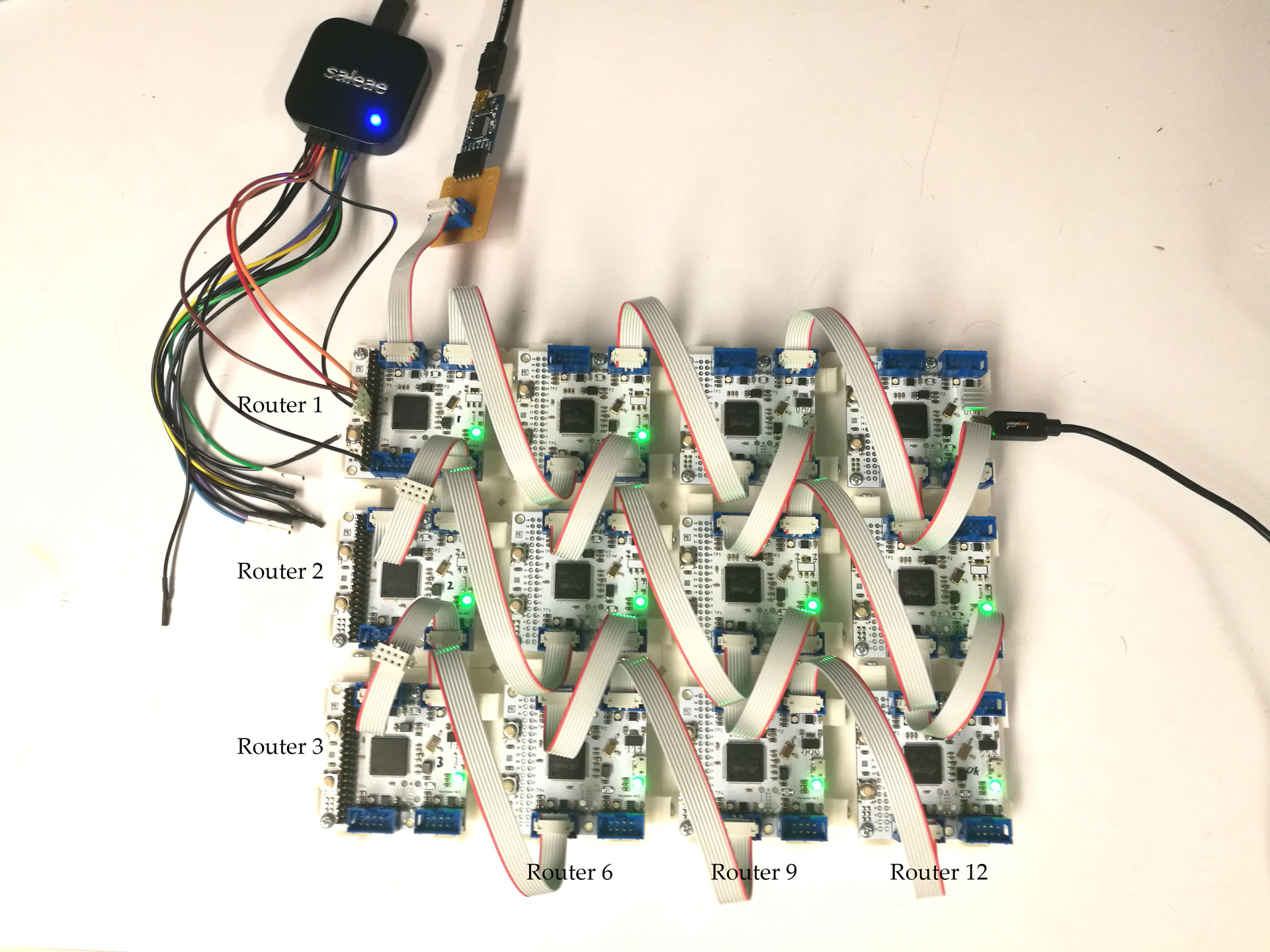

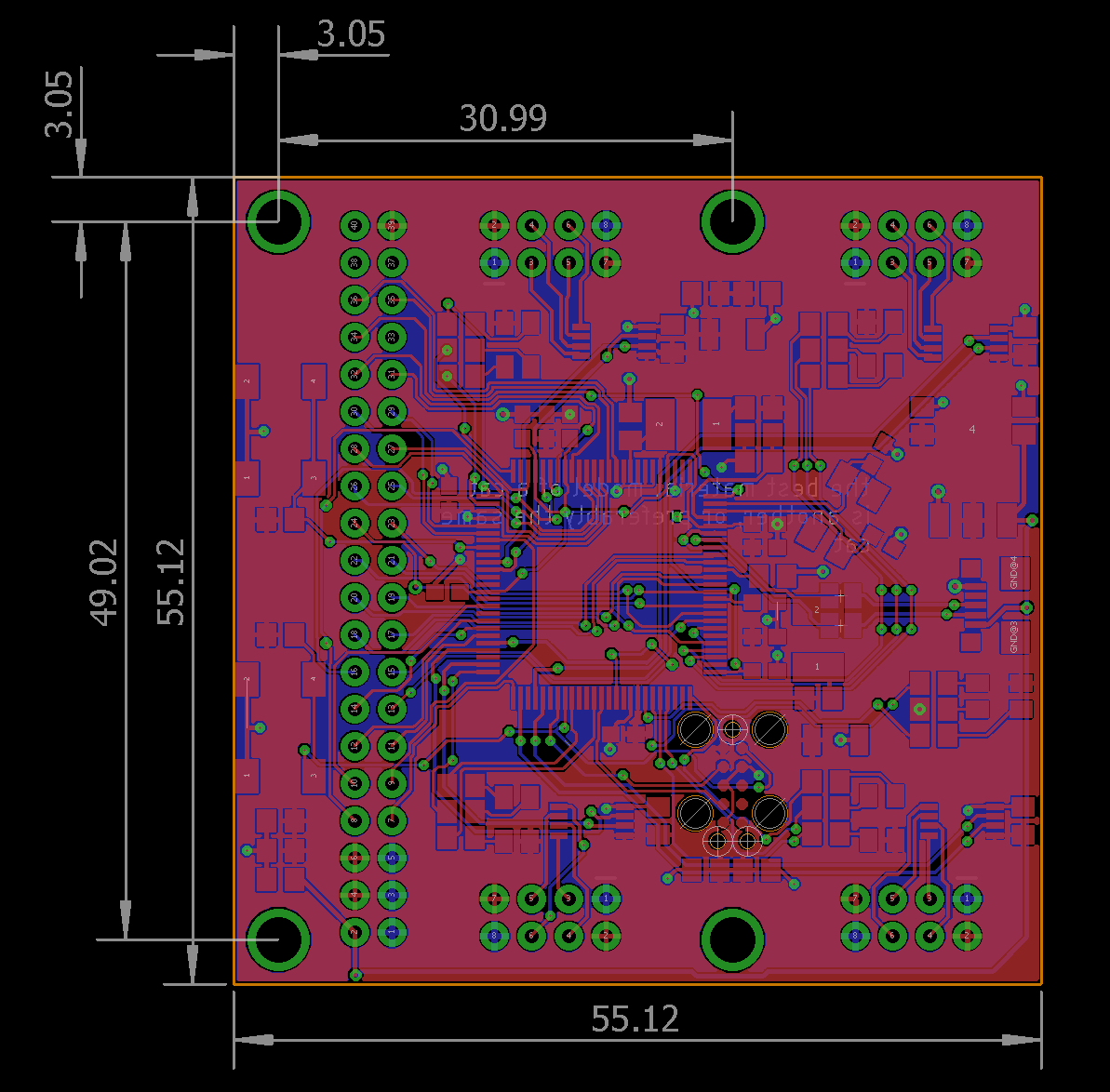

In addition, we developed TinyNets to run on small microcontrollers, that can simulatenously be used to do 'other stuff' - i.e. motor control, sensor reading, etc.

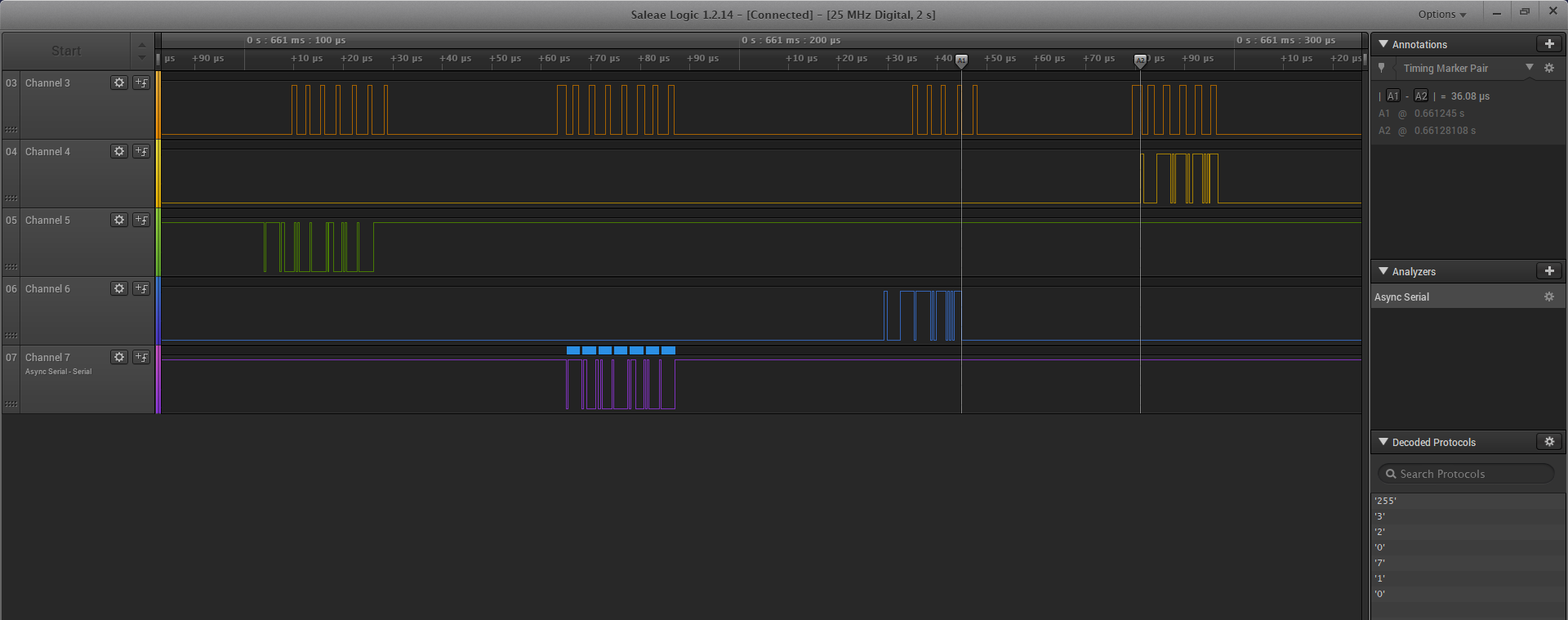

On the hardware we tested (a 300MHz embedded uc) we record a packet processing time<sup>3</sup> of about 37us.

With a bitrate of about ~2MBPS we do a total per-hop time of ~ 100us. This means that between two nodes we can run a 10kHz control loop.

1. A few: TRILL, OSPF, ECMP, SPB

2. STP is necessary to avoid endless message looping on flood packets.

3. The time it takes for a microcontroller to decide where to forward it's packet.

We develop a router, protocol and implementation of a network that:

- Implements a Multipath Distance-Vector Routing Protocol

- Does Realtime Route Selection

- Does Automatic, Convergence-free Route Discovery and Optimization

- Can be arbitrarily implemented in software on numerous microcontrollers

- 10-bit address (1024 Unique in System, scalable by systems designers at the cost of larger packet size)

- Addresses are assigned in software (Ethernet: Hardware Addresses)

- Can be location-based (e.g. first five MSBs correspond to x, last five correspond to y)

| Type | 8 Bits | 10 Bits | 6 Bits | 10 Bits | 6 Bits | N Bytes | CRC |

| --- | --- | --- | --- | --- | --- | --- | --- |

| Standard: | 255 | Dest. | Hop Count | Src. | # Bytes | Payload … | CRC |

| ACK: | 253 | Dest. | Hop Count | Src. | x | x | CRC |

| Buffer Length: | [0 - 251] | x | x | x | x | x | x |

* previously-flooded Standard Packets have start delimiter 254

* previously-flooded Acks have start delimiter 252

if the packet is not a buffer update:

update the LUT using packet src. and hop count

if packet is standard:

if I am destination:

process data in packet

if LUT has destination address:

send packet to port which minimizes C(hops, buffer) = hops + \lambda*buffer over all ports

else:

send packet to all ports as standard flood

else:

increment hop count

if LUT has destination address:

send packet to port which minimizes C(hops, buffer) = hops + \lambda*buffer over all ports

else:

send packet to all ports as ack flood

remove packet src. from LUT at that port if it exists

if I have not yet seen this flood:

if I am destination:

process data in packet

reply with ACK

else:

increment hop count

if LUT has destination address:

send packet to port which minimizes C(hops, buffer) = hops + \lambda*buffer as standard packet

else:

send packet to all ports except one from which it was received

remove packet src. from LUT at that port if it exists

send packet to port which minimizes C(hops, buffer) = hops + \lambda*buffer as standard ACK

send packet to all ports except one from which it was received

The routing table (or lookup table, LUT) consists of rows of:

| Destination | Seen on Ports | Min. Hopcount Recorded on Port | Port buffer size |

This is different from standard Ethernet routing tables:

| Ports | Destinations seen on Port |

Because it includes the current port buffer size and number of hops as part of the table entry, allowing for a more robust cost function for use in the path planning algorithm.

## Buffer Depth Updates

Send buffer depth on all ports every q seconds, and every time a packet leaves or arrives

## Announcements

New arrivals to network do not announce, they simply begin transmitting. Their addresses are recoreded in surrounding switches' tables on their first packet-out.

## Withdrawals

Buffer Depth Updates are Periodic as well as event-based (on buffer-depth change). When no BDU is heard within a 250ms (or other setting) window, the node is considered withdrawn.

Presents a good option for wired routing over robust networks, with some complexity pushed into the nodes. Only really advantageous when we want to be able to re-route messages upstream in order to move around bottlenecks. TN does load-balancing without thinking about it... other approaches would require some implementation. Napoleon's Messenger.

If we include flows, needs per-flow, not per-packet, routing - if we are going to hop about per buffers etc.

Wants a C API for packetizing streams / flows.

Still some question about duplicate message arrivals after message? OR don't packetize flows - add source -> destination 0-255 counter, only take next in this series.

TN wins over the blissfully simple APA when we want a *very big* network, say, want 1,000,000 objects to individually address 1,000,000 objects. Here we also need to introduce heirarchichal addressing.

Critically, measuring APA vs. TN - when does multi-pathing become really important?